Welcome to my blog series on an interesting but complex topic: Generative AI. The topic has many components and specifications, which makes it difficult to grasp all at once. Therefore, each blog in this series explains exactly one topic. Let’s start!

This blog covers the model components of Generative Adversarial Networks (GANs), a basic but fascinating area of the Generative AI domain. Understanding them is a good first step into the world of Generative AI. A GAN is an unsupervised learning technique: the training data is a set of images supplied as input with no labels. Let’s dive into the model architecture.

What Is Generative AI?

Generative AI is a subset of Artificial Intelligence that generates new data based on the input data. It identifies patterns and structure in the data and uses them to produce fake data that looks realistic. Generative AI has various applications:

- Image and Video Generation

- Text Generation

- Data Augmentation

- Speech and Audio Synthesis

Model Architecture

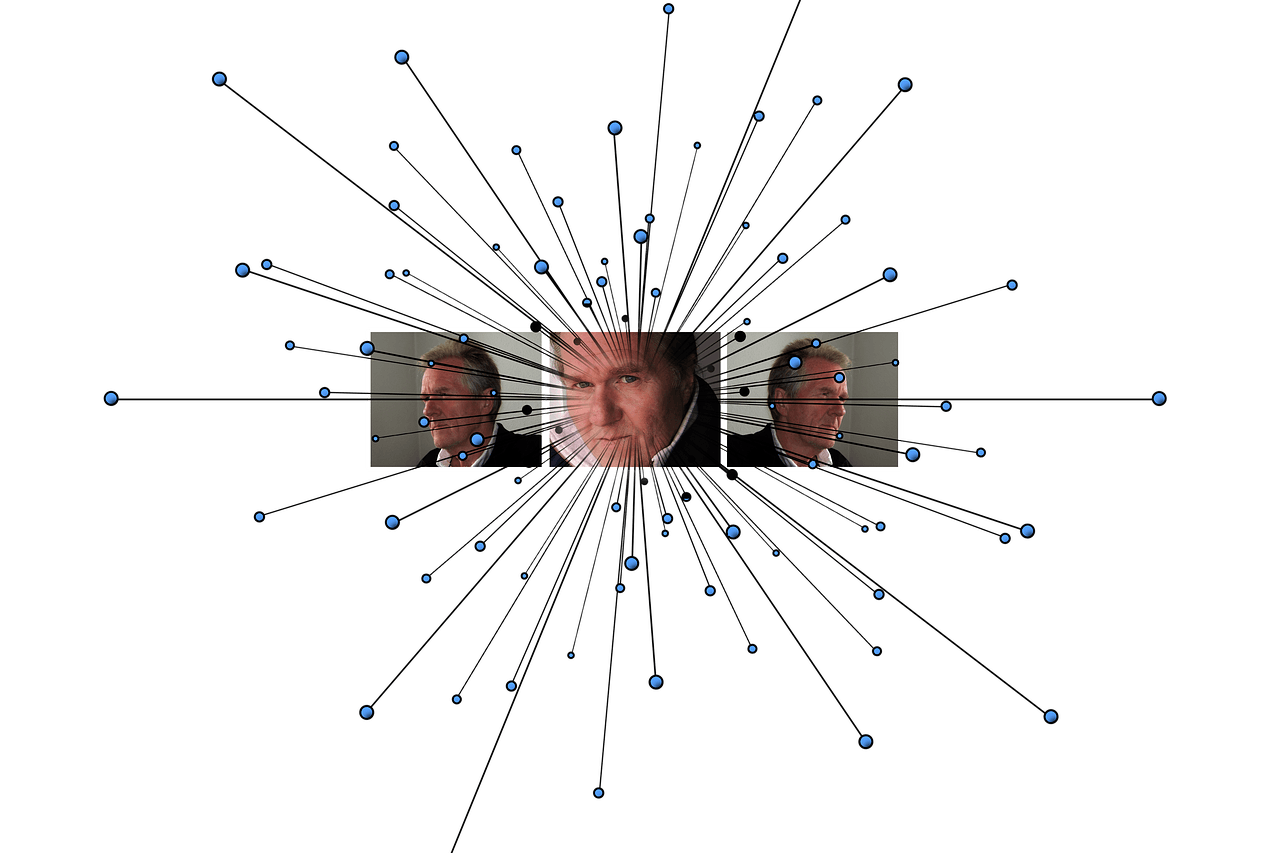

A GAN model is composed of two components: the Generator and the Discriminator. These two components compete with each other, and each optimizes its own parameters in the process. The model is given a set of real images, and the Generator creates fake images alongside them so that the Discriminator has to learn to tell the two apart. Throughout this blog, the Fake label is represented by 0 and the Real label is represented by 1.

Generator:

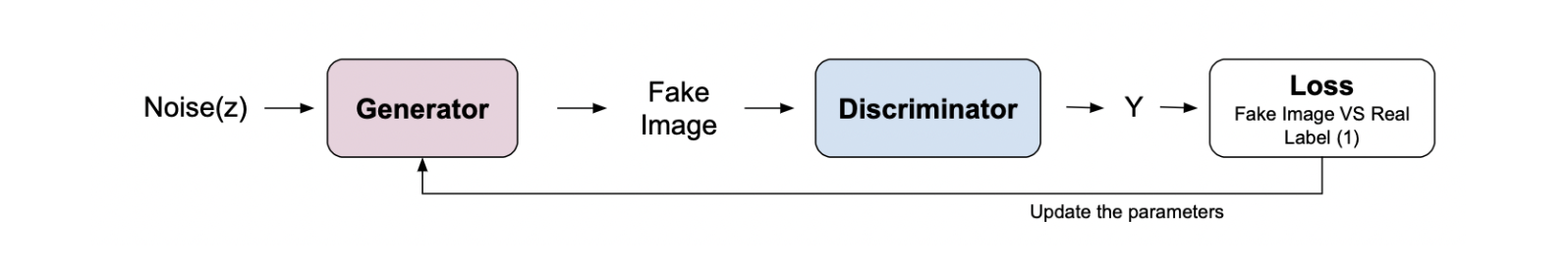

The job of the Generator is to take random noise and create fake images out of it. These fake images are passed to the Discriminator, which outputs the probability that each one is real. That output is then used to train the Generator so that it gets better at deceiving the Discriminator.

Step-by-step process description:

- Generate Random Noise: To generate the fake images, a random input is passed to the Generator. This input is referred to as the noise, and it serves as the raw material for the fake images.

- Creation of Fake Images: The Generator uses multiple neural network layers to transform the noise step by step into a recognizable image.

- Send Fake Images to the Discriminator: Once the images are generated, they are passed to the Discriminator, whose job is to identify whether each image is real (output = 1) or fake (output = 0).

- Feedback Sent Back to the Generator: As with any neural network trained by backpropagation, the feedback from the Discriminator is sent back to the Generator, which adjusts its image generation so that its images become more and more difficult for the Discriminator to identify as fake.
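
To make the steps above a little more concrete, here is a minimal sketch in plain Python. It is only an illustration under assumptions that do not come from this blog: the sizes `NOISE_DIM`, `HIDDEN_DIM`, and `IMAGE_PIXELS` are hypothetical, the two fully connected layers have random, untrained weights, and `generator_loss` uses `-log(D(G(z)))`, which is one common choice of generator loss. The Discriminator scores passed to it are made-up numbers, since no Discriminator is defined here.

```python
import math
import random

def make_layer(n_in, n_out, rng):
    """Random weights and zero biases for one fully connected layer."""
    weights = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def dense(x, layer, activation):
    """Apply one fully connected layer followed by an activation."""
    weights, biases = layer
    return [activation(sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

def relu(v):
    return max(0.0, v)

def generator(noise, layers):
    """Transform a noise vector, layer by layer, into a flat 'image'."""
    hidden = dense(noise, layers[0], relu)
    return dense(hidden, layers[1], math.tanh)  # pixel values in [-1, 1]

def generator_loss(d_fake):
    """Assumed loss: small when the Discriminator scores the fake near 1 (real)."""
    return -math.log(d_fake)

rng = random.Random(0)
NOISE_DIM, HIDDEN_DIM, IMAGE_PIXELS = 8, 16, 4 * 4  # hypothetical sizes
layers = [make_layer(NOISE_DIM, HIDDEN_DIM, rng),
          make_layer(HIDDEN_DIM, IMAGE_PIXELS, rng)]

noise = [rng.gauss(0.0, 1.0) for _ in range(NOISE_DIM)]  # step 1: random noise
fake_image = generator(noise, layers)                     # step 2: fake image

# Steps 3 and 4: the Discriminator's score becomes the Generator's feedback.
# A fake that is caught (score 0.1) costs more than one that passes (score 0.9).
print(generator_loss(0.1) > generator_loss(0.9))
```

A real GAN would use a deep learning framework that computes the gradients automatically. The point here is only the data flow: noise goes in, an image comes out, and the Discriminator’s score determines the loss.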

Discriminator:

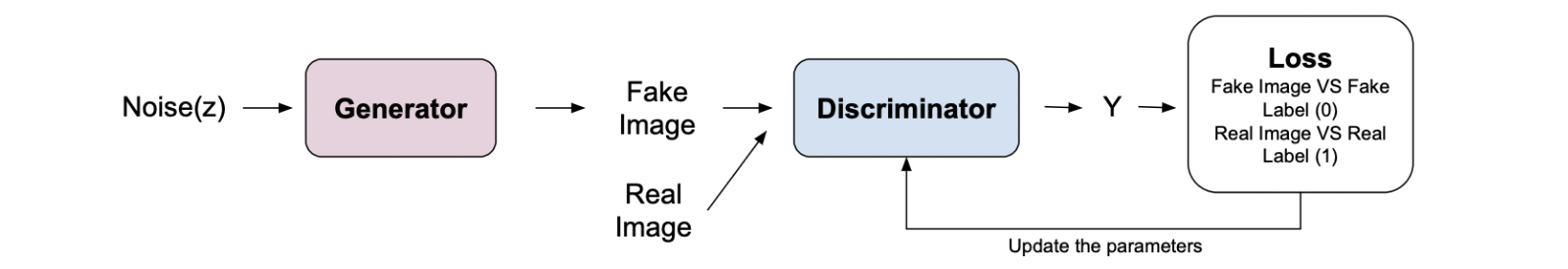

To train the Discriminator, both fake and real images are supplied to it. The Discriminator then tries to identify whether each image is fake or real, and the corresponding loss is used to update its parameters.

Step-by-step process description:

- Input: The Discriminator receives both real images (from the training data set) and fake images (created by the Generator). This mixed batch is used for training.

- Image Identification: The model uses a dense neural network to classify each image as real or fake.

- Calculate Loss Based on Predictions: The loss is low when the Discriminator identifies the fake and real images correctly, and it grows as the number of wrong identifications grows.

- Parameter Update: The loss calculated above is back-propagated and used to update the Discriminator’s parameters, using an optimization algorithm such as gradient descent.
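
The steps above can be sketched in a few lines of plain Python. This is a toy illustration, not the setup of any particular GAN: the "Discriminator" here is a single sigmoid unit rather than a full dense network, the loss is assumed to be binary cross-entropy, and the data (bright "real" images, dark "fake" images, `PIXELS = 4`) is invented so that the example runs on its own.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def predict(weights, bias, image):
    """Probability that the image is real (1 = real, 0 = fake)."""
    return sigmoid(sum(w * p for w, p in zip(weights, image)) + bias)

def bce(prob, label, eps=1e-12):
    """Binary cross-entropy for a single prediction."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def batch_loss(weights, bias, batch):
    return sum(bce(predict(weights, bias, img), y) for img, y in batch) / len(batch)

def sgd_step(weights, bias, batch, lr=0.5):
    """One gradient descent update. For sigmoid + BCE, dLoss/dlogit = prob - label."""
    grad_w = [0.0] * len(weights)
    grad_b = 0.0
    for img, y in batch:
        err = predict(weights, bias, img) - y
        grad_w = [g + err * p for g, p in zip(grad_w, img)]
        grad_b += err
    n = len(batch)
    new_weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
    return new_weights, bias - lr * grad_b / n

rng = random.Random(0)
PIXELS = 4  # hypothetical image size
# Invented toy data: "real" images are bright, "fake" images are dark.
real = [([rng.uniform(0.6, 1.0) for _ in range(PIXELS)], 1) for _ in range(8)]
fake = [([rng.uniform(0.0, 0.4) for _ in range(PIXELS)], 0) for _ in range(8)]
batch = real + fake  # the mixed batch

weights, bias = [0.0] * PIXELS, 0.0
loss_before = batch_loss(weights, bias, batch)
for _ in range(200):
    weights, bias = sgd_step(weights, bias, batch)
loss_after = batch_loss(weights, bias, batch)
print(loss_after < loss_before)
```

Before training, every prediction is 0.5, so the loss starts at log 2 (about 0.693). Each update then pushes the predictions for real images toward 1 and for fake images toward 0, which lowers the loss.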

The Adversarial Process

The back-and-forth process between the Generator and the Discriminator is a very powerful concept that makes GANs unique and effective. The concept is derived from Game Theory and is modeled as a zero-sum game, meaning that one model’s loss is the other model’s gain. In the process, both models improve, which leads to highly realistic fake images and a Discriminator that is hard to fool.
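
For readers who like to see it written down, this zero-sum game is commonly expressed as a minimax objective. The notation is from the standard GAN formulation, not from earlier in this blog: $D(x)$ is the Discriminator’s probability that image $x$ is real, $G(z)$ is the image the Generator creates from noise $z$, $p_{\text{data}}$ is the distribution of real images, and $p_z$ is the noise distribution.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The Discriminator tries to make $V$ as large as possible by scoring real images near 1 and fake images near 0, while the Generator tries to make it as small as possible by pushing $D(G(z))$ toward 1.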

But how is equilibrium reached in this war? In other words, how do we know that the model is well trained?

The answer is simple. The original task of a GAN is to generate realistic images. Therefore, equilibrium is reached when the Generator creates images that the Discriminator cannot tell apart from real ones any better than a random guess.
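
As a rough illustration of "no better than a random guess", the sketch below compares a Discriminator’s accuracy early and late in training. The probabilities in `early` and `late` are invented for the example, and the helper names (`discriminator_accuracy`, `mean_bce`, `near_equilibrium`) and the tolerance `tol=0.1` are hypothetical choices, not a standard stopping rule.

```python
import math

def discriminator_accuracy(probs, labels, threshold=0.5):
    """Fraction of images the Discriminator labels correctly."""
    correct = sum((p >= threshold) == bool(y) for p, y in zip(probs, labels))
    return correct / len(labels)

def mean_bce(probs, labels):
    """Average binary cross-entropy; equals log(2) when every output is 0.5."""
    total = sum(y * math.log(p) + (1 - y) * math.log(1 - p)
                for p, y in zip(probs, labels))
    return -total / len(labels)

def near_equilibrium(accuracy, tol=0.1):
    """Assumed check: accuracy is within `tol` of a coin flip."""
    return abs(accuracy - 0.5) <= tol

labels = [1, 1, 1, 1, 0, 0, 0, 0]  # 1 = real, 0 = fake

# Invented Discriminator outputs for the same eight images.
early = [0.95, 0.90, 0.85, 0.90, 0.10, 0.05, 0.20, 0.10]  # fakes are easy to spot
late = [0.55, 0.45, 0.52, 0.48, 0.53, 0.47, 0.51, 0.49]   # real and fake look alike

for name, probs in (("early", early), ("late", late)):
    acc = discriminator_accuracy(probs, labels)
    print(name, acc, near_equilibrium(acc))
```

Keep in mind that an untrained Discriminator also guesses at random, so a check like this only means something when the generated images are inspected as well.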

This section ends here; another one will be coming soon!